Chaos Engineering is a concept pioneered by Netflix to learn how a system behaves under potential failures before those failures become outages. The goal of chaos engineering is to discover hidden failures and hazards that are not necessarily visible when the system is first promoted to production, and to surface them while any resulting problems are still within a controllable range.

In simpler terms, chaos engineering injects various types of faults into a system to identify vulnerabilities and improve the resilience of a production system.

History of Chaos Engineering

In its early days, Netflix ran its servers in its own data center, but this approach led to single points of failure and other problems.

In August 2008, a major database corruption caused three days of downtime, during which Netflix could not ship DVDs to its members. As a result, Netflix began migrating its services from vertically scaled infrastructure to horizontally scalable, highly reliable distributed systems on Amazon Web Services (AWS), a migration it completed in early 2016.

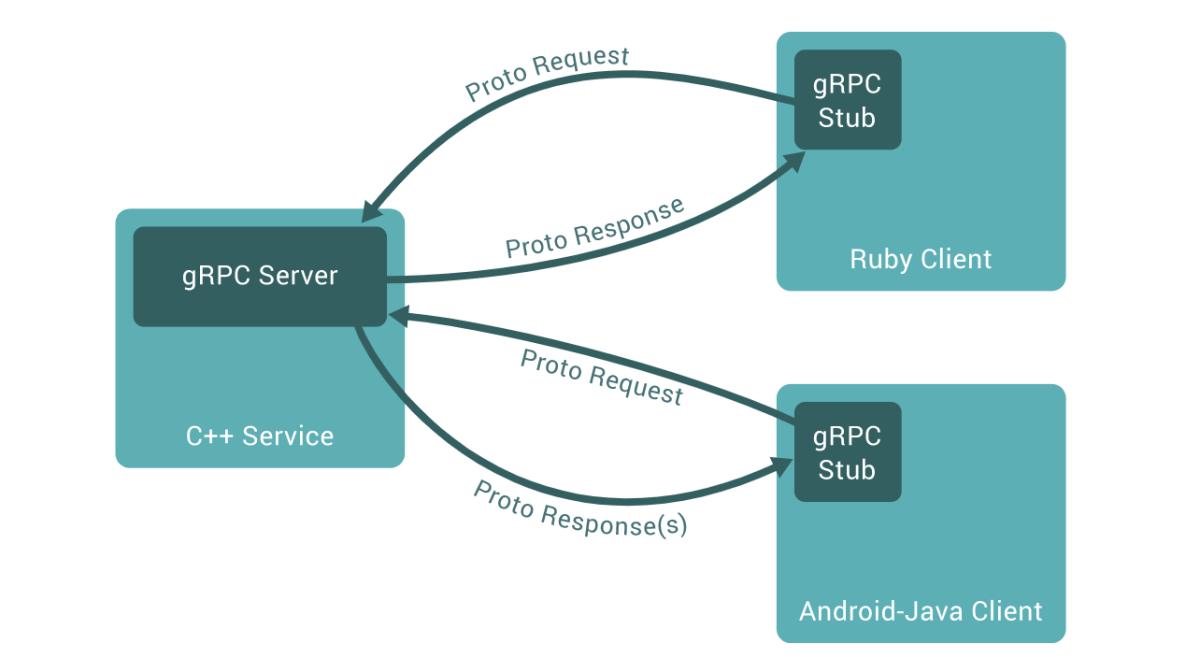

Although the new infrastructure could support a distributed architecture of roughly 100+ microservices, that architecture also introduced complexity and required each microservice to be more reliable and fault-tolerant.

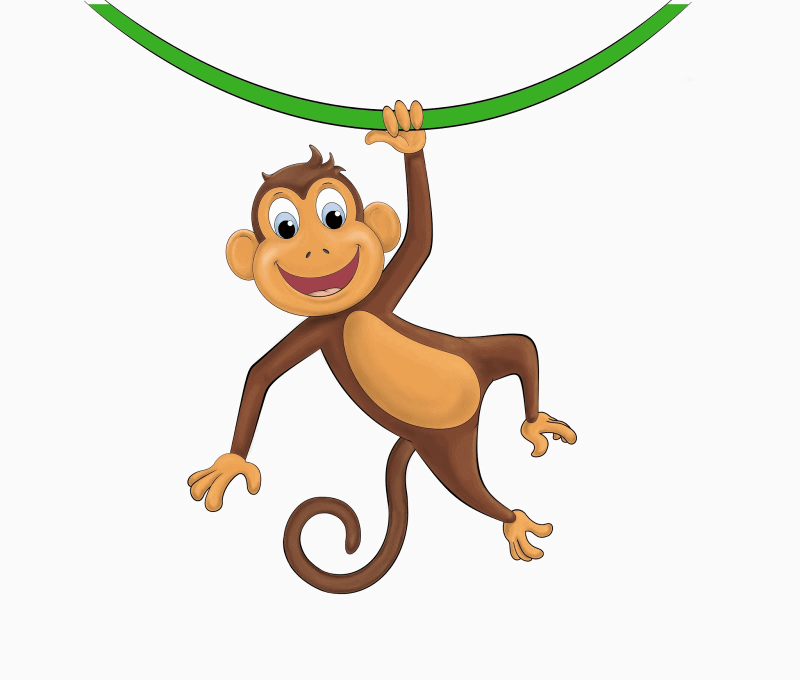

This prompted the Netflix engineering team to create “Chaos Monkey”, a tool that causes failures at random locations throughout the system. It was a breakthrough for testing the reliability and recovery mechanisms of one or more services in the production environment.
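Chaos Monkey's core idea, randomly taking down production instances so that every service must learn to tolerate the loss, can be illustrated with a small simulation. This is a minimal sketch, not Netflix's implementation: the `ServiceGroup` and `Instance` types, the `opted_in` flag, and the per-round `probability` are hypothetical, and "termination" is only simulated by marking an instance unhealthy instead of calling a cloud provider's API.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Instance:
    instance_id: str
    healthy: bool = True


@dataclass
class ServiceGroup:
    name: str
    instances: list[Instance] = field(default_factory=list)
    opted_in: bool = True  # hypothetical flag: only opted-in groups are targeted


def chaos_monkey_round(groups, probability, rng):
    """Terminate at most one random healthy instance per opted-in group.

    Termination is simulated by setting `healthy = False`; a real tool would
    call the cloud provider's API instead. Returns (group, instance) pairs hit.
    """
    terminated = []
    for group in groups:
        if not group.opted_in:
            continue
        candidates = [i for i in group.instances if i.healthy]
        # Each group is hit independently with the given probability.
        if candidates and rng.random() < probability:
            victim = rng.choice(candidates)
            victim.healthy = False
            terminated.append((group.name, victim.instance_id))
    return terminated
```

Passing an explicit `random.Random` instance keeps a round reproducible in tests, while production-style use would rely on genuine randomness so that no team can predict which instance disappears next.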

With the emergence of Chaos Monkey, a new discipline named Chaos Engineering was born. The Principles of Chaos Engineering website describes it as follows:

“a discipline that conducts experiments on distributed systems with the goal of establishing confidence in the system’s ability to withstand turbulent conditions in a production environment.”

In 2012, Netflix open sourced Chaos Monkey. Today, many companies (including Google, Amazon, Nike, etc.) use some form of chaos engineering to improve the reliability of modern architectures.


Principles of chaos engineering

The principles below describe the ideal way to run the experimental process. The more closely we follow them, the more confidence we can have in large-scale distributed systems.

Understand the state of the core indicators when they are stable

A chaos engineering exercise requires indicators that measure the state of the system when it is stable, so that what is expected to happen can be compared with what actually happens.

Indicators can be of two types: (i) system indicators and (ii) business indicators.

System indicators include:

- CPU load

- Memory usage

- Network I/O

- etc.

Business indicators check, for example, whether:

- Users are kicked out of their sessions

- Users can still use key functionality on the website, such as payment, checkout, or adding items to the shopping cart

- There are long delays in loading some of the services

Real-world example: Increase the number of requests to a particular service and check whether the system indicators maintain their steady state or break. If the system indicators cannot quickly return to the steady state after the test completes, the system can be considered unstable.
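The steady-state check in the example above can be sketched as a comparison of post-experiment samples against a baseline with a tolerance, plus a limit on how long recovery may take. This is a minimal sketch under stated assumptions: the `SteadyState` type, the relative `tolerance`, and the idea of counting recovery in samples are illustrative choices, and the p99 latency numbers are hypothetical. The same check applies to business indicators such as a checkout success rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SteadyState:
    """Baseline for one indicator plus the allowed relative deviation."""
    name: str
    baseline: float
    tolerance: float  # 0.25 means within +/-25% of baseline counts as steady

    def holds(self, observed: float) -> bool:
        return abs(observed - self.baseline) <= self.tolerance * self.baseline


def recovery_index(state: SteadyState, samples: list[float]):
    """Index of the first sample from which the indicator stays steady, or None."""
    for idx in range(len(samples)):
        if all(state.holds(v) for v in samples[idx:]):
            return idx
    return None


def is_stable(state: SteadyState, samples: list[float], max_recovery_samples: int) -> bool:
    """True if the indicator returns to (and stays in) steady state quickly enough."""
    idx = recovery_index(state, samples)
    return idx is not None and idx <= max_recovery_samples


# Hypothetical p99 latency (ms) sampled at fixed intervals after a load test ends.
p99 = SteadyState("p99_latency_ms", baseline=200.0, tolerance=0.25)
after_load = [480, 390, 260, 230, 210, 205]
print(recovery_index(p99, after_load))  # 3: steady from the 4th sample on
print(is_stable(p99, after_load, max_recovery_samples=3))  # True
```

Requiring every later sample to stay in range, rather than just one, avoids declaring recovery on a single lucky reading.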

Vary Real-world Events

Every system, from simple to complex, will encounter various problems, sporadic events, and unusual conditions if it runs long enough: hardware failures, software defects, dirty data, traffic spikes, and so on.

Below are the common conditions that can occur in real-world use cases (not an exhaustive list :)):

- Hardware failures

- Functional defect

- Downstream dependency failure

- Resource exhaustion

- Network delay or isolation

- Unexpected combinations of calls between microservices

- Byzantine failure
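Two of the conditions above, network delay and downstream dependency failure, can be simulated in application code by wrapping a dependency call. This is a minimal sketch, not the API of any tool listed later: the `inject_faults` decorator, the `InjectedFault` exception, the rates, and `fetch_recommendations` are all hypothetical names chosen for illustration.

```python
import functools
import random
import time


class InjectedFault(Exception):
    """Raised to simulate a failed downstream dependency."""


def inject_faults(latency_s=0.0, latency_rate=0.0, error_rate=0.0, rng=None):
    """Decorator that, with the given probabilities, adds artificial latency
    before a call and/or raises InjectedFault instead of making the call."""
    if rng is None:
        rng = random.Random()

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if rng.random() < latency_rate:
                time.sleep(latency_s)  # simulate network delay
            if rng.random() < error_rate:
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper
    return decorator


# Hypothetical downstream call with 50% chance of +50 ms and 10% chance of failure.
@inject_faults(latency_s=0.05, latency_rate=0.5, error_rate=0.1, rng=random.Random(7))
def fetch_recommendations(user_id):
    return ["title-1", "title-2"]  # stand-in for a real remote call


def recommendations_with_fallback(user_id):
    """The behavior under test: degrade gracefully instead of failing the page."""
    try:
        return fetch_recommendations(user_id)
    except InjectedFault:
        return []
```

The experiment then asks whether the caller, here `recommendations_with_fallback`, keeps the steady state (for example, the page still renders) while the wrapped dependency misbehaves.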

Exercise in the production environment

Run the experiments in production, or in an environment as similar to production as possible. The closer the environment is to production, the more confidence the chaos engineering experiments will give.

Avoid running the experiments in development, sandbox, or QA environments, as these are not considered the best choice.

Continuously experiment like continuous integration

Chaos experiments can be kicked off with manual procedures, but they should then be automated and standardized to reduce the cost of each experiment.
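Automating an experiment mostly means fixing its shape: check the steady state, inject the fault, check again, and always roll back. The sketch below shows one way to express that as a CI-friendly step. It is loosely inspired by how tools such as Chaos Toolkit structure experiments, but the `Experiment` type, `run_experiment`, and `run_suite` are hypothetical and are not any tool's actual API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Experiment:
    """Hypothetical experiment definition: a steady-state probe, a fault to
    inject, and a rollback that must always run."""
    name: str
    steady_state: Callable[[], bool]
    inject: Callable[[], None]
    rollback: Callable[[], None]


def run_experiment(exp: Experiment) -> str:
    # 1. Never inject faults into a system that is already unhealthy.
    if not exp.steady_state():
        return "aborted"
    try:
        exp.inject()
        # 2. Verify the hypothesis: the steady state should survive the fault.
        passed = exp.steady_state()
    finally:
        # 3. Always roll back, even if injection or verification raised.
        exp.rollback()
    return "passed" if passed else "failed"


def run_suite(experiments) -> int:
    """Run every experiment and return a process exit code for a CI job."""
    results = {exp.name: run_experiment(exp) for exp in experiments}
    for name, outcome in results.items():
        print(f"{name}: {outcome}")
    return 0 if all(r == "passed" for r in results.values()) else 1
```

In a pipeline, a job would call `sys.exit(run_suite([...]))` so that a failed hypothesis fails the build, the same way a failing unit test does in continuous integration.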

Minimize the blast radius

When performing a chaos experiment, define its scope to control the risk. Otherwise, we must be prepared for a big impact: for example, the entire service may go down and all users may be affected at the same time.

We can also consider the canary method, which is similar to A/B testing and the blue/green method. In practice, the experiment is run on a small set of users, so if it goes wrong, only those users are affected and the loss stays under control.
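One common way to limit an experiment to a small set of users is deterministic hash-based bucketing: each user always lands in the same bucket, and only a fixed percentage of buckets is exposed to the fault. This is a minimal sketch; the function name `in_experiment`, the experiment label, and the percentages are hypothetical choices for illustration.

```python
import hashlib


def in_experiment(user_id: str, experiment: str, percent: float) -> bool:
    """Deterministically place about `percent`% of users into the experiment.

    Hashing the experiment name together with the user id keeps each user's
    assignment stable across requests, while different experiments select
    different samples of users.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # buckets 0..9999
    return bucket < percent * 100  # e.g. percent=1.0 selects buckets 0..99
```

Faults are then injected only for requests where `in_experiment(...)` returns `True`, so starting at 1% caps the share of affected users even if the experiment goes badly.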

Chaos Engineering Tools

Below are some of the most notable tools used widely across the industry. For more tools, see the references at the end of this article.

- Blockade

- Byte-Monkey

- Chaos-lambda

- Chaos Monkey

- ChaoSlingr

- Chaos Toolkit

- Drax

- Gremlin Inc

- Kube-monkey

- Orchestrator

- Pumba

- Pod-Reaper

- PowerfulSeal

- Toxiproxy

- MockLab

- Muxy

- Wiremock

- Namazu

- GomJabbar

Netflix's experience: lessons learned while performing Chaos Engineering

- Define a goal: Define a clear and measurable goal before performing chaos engineering

- Embrace Failures: In short, the core of chaos engineering is to expose existing problems, not to create new problems in the system. Establish a technical culture that faces and embraces failure.

- Continuous Improvement: Start with small experiment scenarios and gradually broaden the scope of experiments across the system. Preparation is more important than implementation, and it also helps you contain risks at a smaller scale.
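"Start small and gradually broaden the scope" can be made mechanical: run the same experiment at increasing blast radii and stop widening at the first stage where the steady state breaks. This is a minimal sketch; `expand_gradually`, the stage percentages, and the `run_stage` callback are hypothetical, and `run_stage` stands in for whatever actually injects the fault and checks the indicators.

```python
def expand_gradually(stages, run_stage):
    """Run an experiment at increasing scopes, stopping at the first failure.

    stages: increasing percentages of traffic or hosts, e.g. [1, 5, 25].
    run_stage: callable(percent) -> bool, True if the steady state held.
    Returns (largest percent that passed or None, list of (percent, ok)).
    """
    history = []
    last_ok = None
    for percent in stages:
        ok = run_stage(percent)
        history.append((percent, ok))
        if not ok:
            break  # contain the risk: never widen the scope after a failure
        last_ok = percent
    return last_ok, history
```

For example, if the system only degrades once a quarter of traffic is affected, `expand_gradually([1, 5, 25, 50], lambda p: p < 25)` stops at 25% and reports 5% as the largest scope that passed, without ever exposing 50% of users.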

Chaos Engineering Survey

The 2021 survey from Gremlin, a chaos engineering company, with major contributions from Grafana Labs, Dynatrace, Epsagon, LaunchDarkly, and PagerDuty, showed frequent use of chaos engineering. The survey also highlighted a tipping point in cloud computing: 60% of respondents said they run the majority of their workloads on public cloud, with AWS at 40%, followed by GCP, Azure, and on-premises at around 12%.

Key Findings

- Most notably, teams that frequently run Chaos Engineering experiments have >99.9% availability.

- 23% of teams had a mean time to resolution (MTTR) of under 1 hour and 60% under 12 hours

- Network attacks are the most commonly run experiments.

- 60% of respondents have run at least one Chaos Engineering attack in their environments.

- 34% of respondents run Chaos Engineering experiments in production. The others reserve chaos engineering for development or staging systems.
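To put the availability and MTTR figures above into perspective, an availability target translates directly into a yearly downtime budget, and MTTR is simply the average time taken to resolve incidents. This is a small worked sketch: the helper names are hypothetical, a 365-day year is assumed, and the three incident durations are made-up illustrative values, not survey data.

```python
from datetime import timedelta

HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def downtime_budget(availability: float) -> timedelta:
    """Maximum downtime per year allowed by an availability target (0..1)."""
    return timedelta(hours=(1 - availability) * HOURS_PER_YEAR)


def mttr(resolution_times: list[timedelta]) -> timedelta:
    """Mean time to resolution; assumes at least one incident."""
    return sum(resolution_times, timedelta()) / len(resolution_times)


# 99.9% availability leaves roughly 8.76 hours of downtime per year.
print(downtime_budget(0.999))

# Hypothetical incidents resolved in 30 min, 90 min, and 6 h.
print(mttr([timedelta(minutes=30), timedelta(minutes=90), timedelta(hours=6)]))  # 2:40:00
```

With a budget of under nine hours a year, a single incident with a 12-hour resolution time would break a 99.9% target on its own, which is why lowering MTTR matters as much as preventing incidents.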

Conclusion

Chaos engineering is an approach to identifying failures and issues before they become outages, and it is not tied to any particular tool or technology. Increased availability and decreased mean time to resolution (MTTR) are the two most common benefits of chaos engineering.

However, it is recommended to use mature open-source or commercial tools, chosen by weighing factors such as fault tolerance, development cost, and operation and maintenance efficiency. AWS, for example, offers its own managed chaos engineering service, AWS Fault Injection Service.

In conclusion, breaking things on purpose gives us an opportunity to build a resilient production system. Netflix learned this important lesson: avoid failure through continuous failure.

References

This article is inspired by the following websites:

1. https://github.com/dastergon/awesome-chaos-engineering

2. https://principlesofchaos.org/

3. https://www.gremlin.com/community/tutorials/chaos-engineering-the-history-principles-and-practice/

4. https://www.oreilly.com/library/view/chaos-engineering/9781491988459/ch01.html