In this article, I will walk you step by step through installing Hadoop 3.3.0 on macOS Big Sur (version 11.2.1) with Homebrew, as a single-node cluster in pseudo-distributed mode.

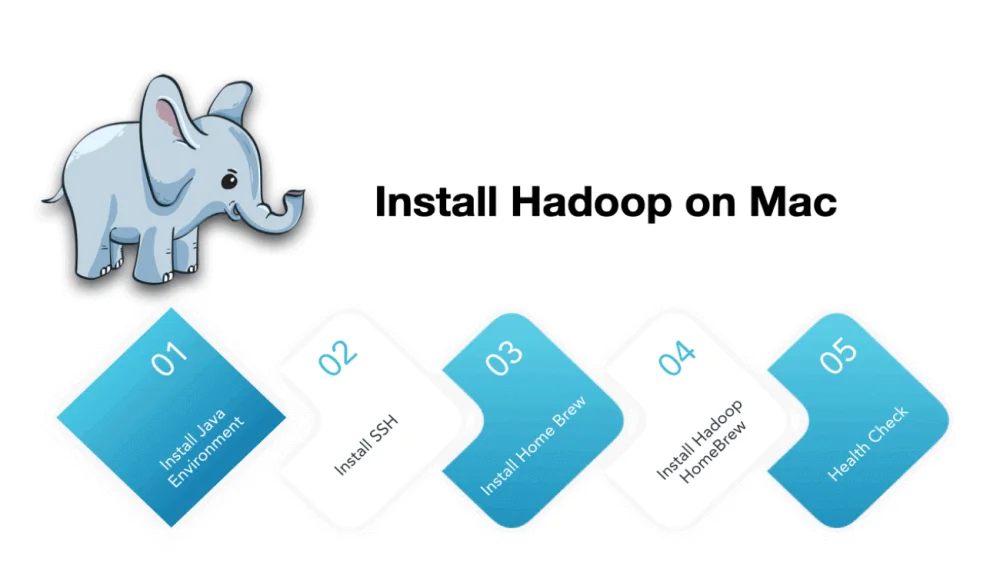

Install Hadoop on Mac

The installation of Hadoop is divided into these steps:

- Install Java environment

- Install Homebrew

- Install SSH

- Install Hadoop through Homebrew

- Health Check

Install Java Environment

- Open the terminal and enter java -version to check the current Java version. If no version is returned, download and install a JDK from the official website.

- After the installation is complete, configure the Java environment variables. The JDK is installed under the directory /Library/Java/JavaVirtualMachines.

- Enter vim ~/.bash_profile in the terminal to configure the Java path. (If your shell is zsh, the default since macOS Catalina, edit ~/.zshrc instead.)

- Add the following statement on a blank line, replacing <jdk version> with the name of your installed JDK directory: export JAVA_HOME="/Library/Java/JavaVirtualMachines/<jdk version>.jdk/Contents/Home"

- Then run source ~/.bash_profile in the terminal to make the configuration take effect.

- Finally, enter java -version in the terminal again. You should see the Java version, similar to the output below.

$ java -version

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (AdoptOpenJDK)(build 1.8.0_252-b09)

OpenJDK 64-Bit Server VM (AdoptOpenJDK)(build 25.252-b09, mixed mode)

Note: Your Mac may already have Java installed, but the version may be too old, and an outdated version can break the Hadoop installation.
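Since an outdated Java can break the installation, it can help to check the major version in a script rather than by eye. The following is a minimal sketch, not part of the original setup; the function name java_major_version is made up for illustration. It assumes the first line of the java -version output has the usual version "..." form shown above.

```shell
# Extract the major Java version from `java -version` output.
# Legacy scheme: "1.8.0_252" -> 8. Modern scheme: "11.0.10" -> 11.
# $1 = the text printed by `java -version`
java_major_version() {
  jv_version=$(printf '%s\n' "$1" | sed -n '1s/.*version "\([^"]*\)".*/\1/p')
  case "$jv_version" in
    1.*) printf '%s\n' "$jv_version" | cut -d. -f2 ;;  # 1.x.y style
    *)   printf '%s\n' "$jv_version" | cut -d. -f1 ;;  # x.y.z style
  esac
}
```

Because java -version writes to standard error, a call would look like java_major_version "$(java -version 2>&1)".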

Install Homebrew

Homebrew is the most widely used package manager on the Mac and needs little introduction. The Ruby-based installer has been replaced by a Bash script, so install it with:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Install SSH

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

After this step, open a terminal and enter ssh localhost. You should be logged in without a password prompt, which indicates that the setup succeeded. If the connection is refused, enable Remote Login under System Preferences > Sharing.
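Running the cat ... >> authorized_keys step more than once appends the same key each time. A small sketch of an idempotent variant follows; the function name authorize_key is hypothetical, and the chmod mirrors the permission step in the Apache Hadoop single-node setup guide.

```shell
# Append a public key to an authorized_keys file only if it is not there yet.
# $1 = public key file, $2 = authorized_keys file
authorize_key() {
  ak_pub=$1
  ak_auth=$2
  mkdir -p "$(dirname "$ak_auth")"
  touch "$ak_auth"
  # -x: match whole lines, -F: treat the key as a fixed string, not a regex
  if ! grep -qxF -- "$(cat "$ak_pub")" "$ak_auth"; then
    cat "$ak_pub" >> "$ak_auth"
  fi
  chmod 600 "$ak_auth"   # keep the key file private to the owner
}
```

A typical call would be authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys. To verify the login without risking a password prompt, ssh -o BatchMode=yes localhost true should exit with status 0.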

Install hadoop

brew install hadoop

Note: Homebrew installs the latest Hadoop release, which was Hadoop 3.3.0 at the time of writing.

After the installation is complete, enter hadoop version to view the version. If version information is printed, the installation was successful.

Hadoop 3.3.0

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r aa96f1871bfd858f9bac59cf2a81ec470da649af

Compiled by brahma on 2020-07-06T18:44Z

Compiled with protoc 3.7.1

From source with checksum 5dc29b802d6ccd77b262ef9d04d19c4

This command was run using /usr/local/Cellar/hadoop/3.3.0/libexec/share/hadoop/common/hadoop-common-3.3.0.jar

Hadoop configuration

To set up HDFS, edit the following files under $HADOOP_HOME/etc/hadoop/ using the configuration details below.

- core-site.xml

- hdfs-site.xml

- mapred-site.xml

- yarn-site.xml

- hadoop-env.sh

First, set the following environment variables in the ~/.bash_profile file in your $HOME directory:

## JAVA env variables

export JAVA_HOME="/Library/Java/JavaVirtualMachines/adoptopenjdk-8.jdk/Contents/Home"

export PATH=$PATH:$JAVA_HOME/bin

## HADOOP env variables

export HADOOP_HOME="/usr/local/Cellar/hadoop/3.3.0/libexec"

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export HADOOP_CLASSPATH=${JAVA_HOME}/lib/tools.jar

## HIVE env variables

export HIVE_HOME=/usr/local/Cellar/hive/3.1.2_3/libexec

export PATH=$PATH:$HIVE_HOME/bin

## MySQL ENV

export PATH=$PATH:/usr/local/mysql-8.0.12-macos10.13-x86_64/bin

Hadoop Installed Path

/usr/local/Cellar/hadoop/3.3.0/libexec/etc/hadoop
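The Cellar path above contains the version number, so it stops being valid after a brew upgrade. As a hedged alternative, brew --prefix hadoop prints a version-independent location (typically /usr/local/opt/hadoop on Intel Macs). The helper name detect_hadoop_home below is invented for this sketch.

```shell
# Print the Hadoop home of a Homebrew install, or fail if it cannot be found.
detect_hadoop_home() {
  command -v brew >/dev/null 2>&1 || { echo "brew not found" >&2; return 1; }
  dh_prefix=$(brew --prefix hadoop) || return 1
  # Homebrew keeps the actual Hadoop distribution under libexec
  printf '%s/libexec\n' "$dh_prefix"
}
```

In ~/.bash_profile this could replace the hard-coded value: export HADOOP_HOME="$(detect_hadoop_home)".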

core-site.xml

Open $HADOOP_HOME/etc/hadoop/core-site.xml in a terminal and add the properties below.

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

hdfs-site.xml

Open $HADOOP_HOME/etc/hadoop/hdfs-site.xml in a terminal and add the properties below.

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>

yarn-site.xml

Open $HADOOP_HOME/etc/hadoop/yarn-site.xml in a terminal and add the properties below.

<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>

mapred-site.xml

Open $HADOOP_HOME/etc/hadoop/mapred-site.xml in a terminal and add the properties below.

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>
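After editing the site files by hand, it is easy to leave a typo in a property. The sketch below reads a property value back out of a site file so it can be checked from a script. The function name get_site_property is made up here, and the text-based matching is a simplification: it assumes each name element is directly followed by its value element and does not handle XML comments or entities.

```shell
# Print the value of a property from a Hadoop *-site.xml file.
# $1 = path to the XML file, $2 = property name
get_site_property() {
  # Join the file into one line so name and value can sit on separate lines,
  # then capture the text of the <value> that follows the matching <name>.
  tr -d '\n' < "$1" |
    sed -n "s:.*<name>$2</name>[[:space:]]*<value>\([^<]*\)</value>.*:\1:p"
}
```

For example, get_site_property "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS should print hdfs://localhost:9000 with the configuration above. With Hadoop on the PATH, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves.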

hadoop-env.sh

Open $HADOOP_HOME/etc/hadoop/hadoop-env.sh in a terminal and add the line below.

# export JAVA_HOME [same value as in ~/.bash_profile]

export JAVA_HOME="/Library/Java/JavaVirtualMachines/adoptopenjdk-8.jdk/Contents/Home"

Format HDFS

Open a terminal and initialize the Hadoop cluster by formatting the HDFS NameNode directory:

$HADOOP_HOME/bin/hdfs namenode -format
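Formatting erases existing HDFS metadata, so it should only run once. The following sketch guards against an accidental second format. The function names are hypothetical, and the directory is an assumption: with no hadoop.tmp.dir or dfs.namenode.name.dir override (as in this article), the NameNode is expected to store its data under /tmp/hadoop-<user>/dfs/name.

```shell
# Succeed if the given NameNode directory already holds a formatted filesystem.
# $1 = NameNode storage directory
namenode_is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Format the NameNode only when no existing filesystem is found.
# $1 = NameNode storage directory
format_namenode_once() {
  if namenode_is_formatted "$1"; then
    echo "NameNode already formatted at $1; skipping"
  else
    "$HADOOP_HOME/bin/hdfs" namenode -format
  fi
}
```

Under the stated assumption, the call would be format_namenode_once "/tmp/hadoop-$(id -un)/dfs/name".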

Final Step

Run the start-all.sh script in the sbin folder:

$HADOOP_HOME/sbin/start-all.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [K9-MAC-061.local]

Starting resourcemanager

Starting nodemanagers

Use the jps command to check that the NameNode, DataNode, ResourceManager, and the other daemons have all started successfully:

4929 DataNode

5294 NodeManager

5200 ResourceManager

5354 Jps

5046 SecondaryNameNode

4831 NameNode
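Scanning the jps listing by eye works, but a script can flag a missing daemon directly. Here is a minimal sketch that takes the jps output as text and reports which of the five daemons from the listing above are absent. The function name missing_daemons is invented for illustration.

```shell
# Print the expected Hadoop daemons that do not appear in jps output.
# Returns 0 when all are present, 1 otherwise.
# $1 = the text printed by `jps`
missing_daemons() {
  md_missing=""
  for md_name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -w matches whole words, so "NameNode" is not satisfied by "SecondaryNameNode"
    printf '%s\n' "$1" | grep -qw -- "$md_name" || md_missing="$md_missing $md_name"
  done
  [ -z "$md_missing" ] && return 0
  echo "${md_missing# }"
  return 1
}
```

A typical call would be missing_daemons "$(jps)" && echo "all daemons running".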

How to Access Hadoop Web Interfaces (Hadoop Health)

- NameNode: http://localhost:9870

- NodeManager: http://localhost:8042

- Resource Manager (YARN): http://localhost:8088/cluster
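The same health check can be done from the terminal instead of a browser. The sketch below asks curl for the HTTP status code of a web interface and treats any 2xx or 3xx response as up. The function name check_ui and the 5-second timeout are choices made for this example.

```shell
# Report whether a Hadoop web interface answers over HTTP.
# $1 = URL to probe
check_ui() {
  # -s: silent, -o /dev/null: discard the body, -m 5: give up after 5 seconds,
  # -w '%{http_code}': print only the status code (000 if nothing answered)
  ui_code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$1")
  case "$ui_code" in
    2??|3??) echo "$1 OK (HTTP $ui_code)" ;;
    *)       echo "$1 DOWN (HTTP ${ui_code:-none})"; return 1 ;;
  esac
}
```

For example, check_ui http://localhost:9870 probes the NameNode interface; the other two URLs from the list can be checked the same way.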

Running Basic HDFS Command

hadoop fs -mkdir -p /sales/data

hadoop fs -put /Users/raghunathan.bakkianathan/Work/testData.csv /sales/data

hadoop fs -ls /sales/data
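To confirm that HDFS really stores and returns data, the commands above can be combined into a small round-trip check: upload a file, read it back, and compare it with the original. This is only a sketch; the function name hdfs_roundtrip is hypothetical, and it uses the -f option of hadoop fs -put so that repeated runs overwrite the earlier copy.

```shell
# Upload a local file to HDFS, read it back, and compare byte for byte.
# Returns 0 when the copy read from HDFS is identical to the local file.
# $1 = local file, $2 = HDFS directory
hdfs_roundtrip() {
  rt_name=$(basename "$1")
  hadoop fs -mkdir -p "$2" &&
    hadoop fs -put -f "$1" "$2/" &&
    hadoop fs -cat "$2/$rt_name" | cmp -s - "$1"
}
```

With the cluster running, hdfs_roundtrip /path/to/testData.csv /sales/data && echo "HDFS round trip OK" would exercise the write and read paths in one step.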
