Load balancing refers to software or hardware that distributes workloads (incoming requests and data) among multiple computing instances (servers) in order to improve the performance and reliability of websites, services, applications, and databases.

The main goal of load balancing is to reduce the pressure on any single server as much as possible through horizontal scaling. There are many options for implementing a load balancer, such as F5, Nginx, and HAProxy, as well as the managed services of cloud providers, such as AWS ELB and Google Cloud Load Balancing.

Benefits of load balancing

Let us look at the benefits of using a load balancer.

- Performance: Load balancing distributes requests more evenly across multiple resources, thereby improving the performance of the entire system.

- Scalability: With a load balancer in front, capacity can easily scale to meet traffic spikes (Black Friday, Cyber Monday, etc.) and growing business needs without reducing the quality of service.

- Reliability: When a resource fails or crashes, the load balancer routes traffic to the remaining healthy devices, which can be hosted anywhere in the cloud environment.

Why do we need Load Balancing?

In the early days, a traditional application resided on a single server (a 1:1 relationship between application and backend). Business logic and traffic were relatively small and simple, so a single server could meet basic needs.

But a single server cannot keep up with growing business traffic, so eventually applications were deployed on multiple servers to scale performance and avoid a single point of failure.

Problem 1: How do we distribute the traffic of different users to different servers?

Answer 1: This is where the load balancer comes into the picture. The client’s traffic first reaches the load balancing server, which distributes each request to one of the application servers according to a load balancing scheduling algorithm. At the same time, the load balancing server performs periodic health checks on the application servers. When it finds a faulty node, it dynamically removes that node from the application server cluster to ensure high availability of the application.

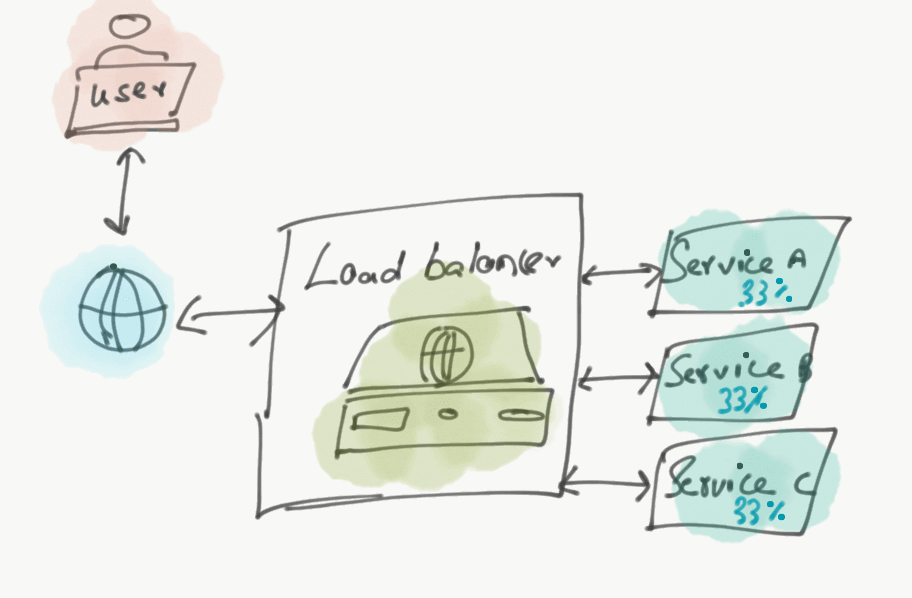

Layers of load balancing

Load balancing is divided into Layer 4 load balancing and Layer 7 load balancing. Layer 4 load balancing works at the transport layer (TCP/UDP), and its main job is forwarding. After receiving the client’s traffic, it simply forwards the network packets to the application server without inspecting the content of the packets.

Layer 7 load balancing works at the application layer of the OSI model. Unlike Layer 4, Layer 7 needs to analyze application-layer traffic. After receiving the client traffic, it reads the message and makes a decision based on its content. It then establishes a separate TCP connection with the chosen application server and sends the request over it.
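To make the difference concrete, here is a minimal Python sketch of the kind of decision a Layer 7 balancer can make and a Layer 4 balancer cannot: choosing a backend pool from the request path. The pool addresses, path prefixes, and the `choose_pool` name are hypothetical and exist only for illustration.

```python
# Hypothetical backend pools, keyed by URL path prefix (illustrative only).
ROUTES = {
    "/api/": ["10.0.1.10:8080", "10.0.1.11:8080"],
    "/static/": ["10.0.2.10:8080"],
}
DEFAULT_POOL = ["10.0.3.10:8080"]


def choose_pool(request_line):
    """Pick a backend pool by inspecting the HTTP request line.

    A Layer 4 balancer never reads this text; it only sees addresses and ports.
    """
    # An HTTP request line looks like: "GET /api/users HTTP/1.1"
    parts = request_line.split()
    path = parts[1] if len(parts) >= 2 else "/"
    for prefix, pool in ROUTES.items():
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

Once a pool is chosen, any of the algorithms in this article could pick the individual server within it.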

Common Load balancing Algorithms

The load balancer generally decides which server to forward the request to based on two factors.

First, it ascertains that a candidate upstream server can respond to the request; then it selects one server from the healthy pool according to the pre-configured rules.

Because the load balancer should only select back-end servers that can respond, normally there needs to be a way to determine whether the back-end servers are “healthy”.

To monitor the running status of the backend servers, the health check service periodically tries to connect to each backend server using the protocol and port defined by the forwarding rule.

If an upstream server fails the health check, it is dynamically removed from the pool, so that live traffic is not forwarded to it until it passes the health check again.
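The health-check step can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the check is a plain TCP connect, and the `tcp_probe` and `healthy_pool` names are made up for this example. Real load balancers usually offer richer checks, such as HTTP status codes.

```python
import socket


def tcp_probe(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def healthy_pool(servers, probe=tcp_probe):
    """Return only the servers that currently pass the probe.

    `servers` is a list of (host, port) tuples. Calling this on every check
    interval drops failing servers and brings recovered ones back.
    """
    return [(host, port) for host, port in servers if probe(host, port)]
```

The `probe` argument is injectable so that the selection logic can be exercised without touching the network.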


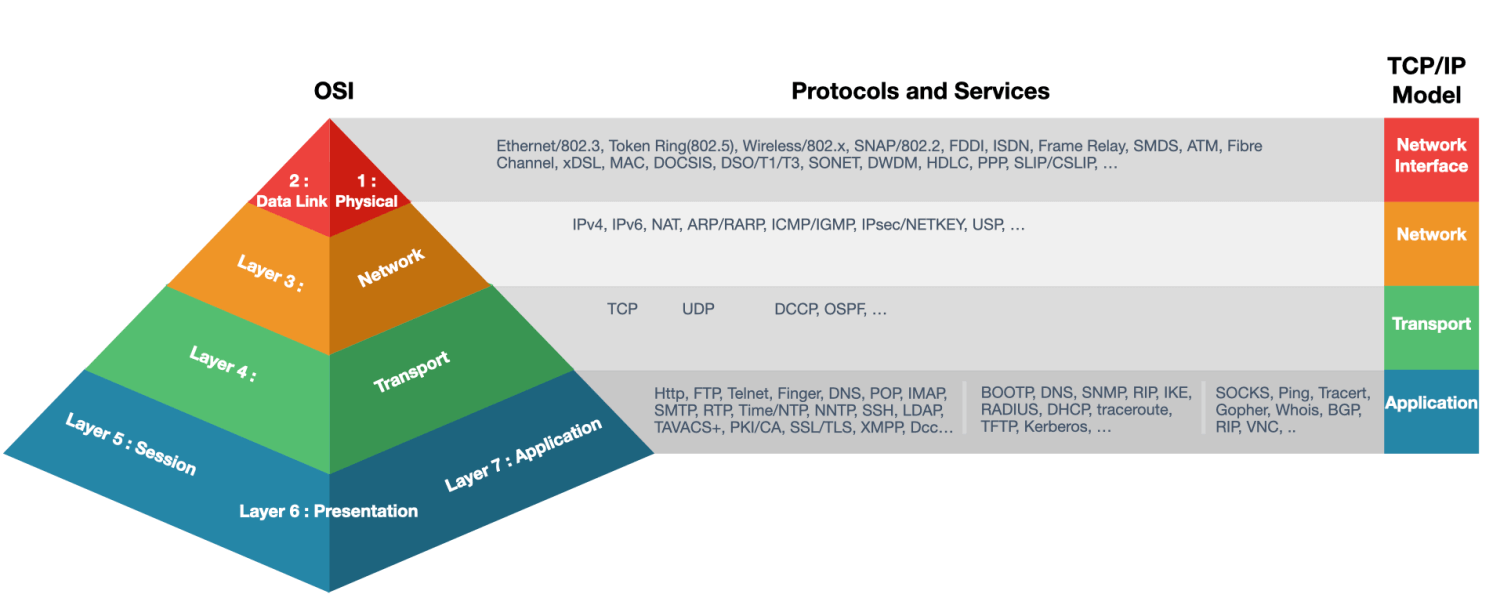

- Round Robin

Round robin is the default policy in many load balancers.

There are two variants of round robin:

i) Weighted Round Robin

ii) Dynamic Round Robin.

When the load balancer receives a request, it assigns the request to the first server in the list, then moves down the list for each subsequent request. Once every server has received a connection, it starts again from the top in the same order.
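As a minimal sketch, plain round robin is just an endless cycle over the server list. The server names are placeholders.

```python
import itertools


def round_robin(servers):
    """Return an endless iterator that walks the list in order, then starts over."""
    return itertools.cycle(servers)


picker = round_robin(["server1", "server2", "server3"])
first_five = [next(picker) for _ in range(5)]
# first_five == ["server1", "server2", "server3", "server1", "server2"]
```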

(i) The weighted round-robin algorithm adds a weight to each server in the rotation, and routes requests based on these preassigned weights. For example, if server 1 has weight 1, server 2 has weight 2, and server 3 has weight 3, then in one cycle of six requests server 1 is picked once, server 2 twice, and server 3 three times. A typical order would be 1-2-2-3-3-3.
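A simple way to sketch weighted round robin is to repeat each server in the cycle as many times as its weight. This is an illustrative simplification; many real balancers interleave the picks more smoothly so that a heavy server does not receive a burst of consecutive requests.

```python
import itertools


def weighted_round_robin(weights):
    """Return an endless iterator where each server appears `weight` times per cycle.

    `weights` maps server name -> positive integer weight.
    """
    cycle = [server for server, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(cycle)


picker = weighted_round_robin({"server1": 1, "server2": 2, "server3": 3})
one_cycle = [next(picker) for _ in range(6)]
# one_cycle == ["server1", "server2", "server2", "server3", "server3", "server3"]
```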

(ii) Dynamic round robin calculates the server weights in real time, from the current state of each server, and routes requests based on those weights.

- Least Connections

This algorithm sends each request to the server with the fewest active connections (sessions) among the available servers.
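In code, least connections comes down to taking a minimum over a connection counter. A minimal sketch, with made-up server names and counts:

```python
def least_connections(active):
    """Return the server with the fewest active connections.

    `active` maps server name -> current number of open connections.
    Ties go to whichever server comes first in the mapping.
    """
    return min(active, key=active.get)


active = {"server1": 12, "server2": 4, "server3": 9}
chosen = least_connections(active)  # "server2"
active[chosen] += 1                 # the balancer records the new connection
```

The counter has to be decremented again when a connection closes, which is why this method needs the balancer to track connection state.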

- Weighted Least Connection

This algorithm adds a weight to each server in the least connection algorithm. Each server is assigned a weight in advance that reflects its capacity, and client requests are forwarded to the server with the fewest active connections relative to its weight.
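A common way to express "fewest connections relative to weight" is to compare the ratio of active connections to weight. The sketch below assumes that rule; the names and numbers are illustrative.

```python
def weighted_least_connections(active, weights):
    """Return the server with the lowest connections-to-weight ratio."""
    return min(active, key=lambda server: active[server] / weights[server])


active = {"server1": 10, "server2": 12}
weights = {"server1": 1, "server2": 3}
chosen = weighted_least_connections(active, weights)
# server1 ratio is 10 / 1 = 10.0, server2 ratio is 12 / 3 = 4.0, so server2 is chosen
```

Here server2 wins even though it holds more connections, because its weight says it can carry three times the load.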

- Least Response Time

Similar to the least connections method, the least response time method assigns requests based on both the fewest active connections and lowest average response time.
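How the two signals are combined differs between products. The sketch below assumes one simple scoring rule, (connections + 1) multiplied by average latency, purely for illustration.

```python
def least_response_time(stats):
    """Return the server with the lowest (connections + 1) * average latency.

    `stats` maps server name -> (active_connections, avg_response_ms).
    The scoring formula is an illustrative assumption, not a standard.
    """
    def score(server):
        connections, avg_ms = stats[server]
        return (connections + 1) * avg_ms

    return min(stats, key=score)


stats = {
    "server1": (5, 40.0),   # score 6 * 40 = 240
    "server2": (2, 120.0),  # score 3 * 120 = 360
    "server3": (8, 20.0),   # score 9 * 20 = 180
}
chosen = least_response_time(stats)  # "server3"
```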

- Least Bandwidth Method

In the least bandwidth method, each request is routed to the server that is currently serving the least amount of traffic, based on bandwidth consumption measured in megabits per second (Mbps).

- IP Hash

This algorithm consistently forwards packets from the same sender (or packets sent to the same destination) to the same server, by computing a hash of the sender’s IP address and the destination IP address. As long as that server is available, the load balancer routes requests from the same client to the same backend server.
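A minimal sketch of the idea, assuming only the client IP is hashed (the destination IP could be mixed into the hash in the same way). The `ip_hash` name and the addresses are hypothetical.

```python
import hashlib


def ip_hash(client_ip, servers):
    """Map a client IP to one server; the same IP always maps to the same server."""
    # hashlib is used instead of the built-in hash(), whose value for strings
    # changes between Python processes.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server1", "server2", "server3"]
first = ip_hash("203.0.113.7", servers)
second = ip_hash("203.0.113.7", servers)  # same client, same server
```

Note that with this simple modulo scheme, adding or removing a server changes the mapping for many clients. Consistent hashing is the usual technique for reducing that churn.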

My Two cents

When implementing a load balancer, please consider the points below, which I explain at a high level from my own experience.

Refer to this article on service discovery, which helps services discover each other on the network.

i) Health Check

When an instance is taken out of service by the load balancer, or fails a health check, make sure it has to pass multiple successive polls before it is put back into live traffic, so that it does not receive requests before it is ready. Validation should also not stop at the application itself: check that all the backend services it depends on are functioning well. The deeper the validation goes, the more reliable the entire service will be.
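The "multiple, successive polls" rule can be sketched as a small state tracker. The `HealthState` class is hypothetical; its `rise` and `fall` thresholds are named after the similar HAProxy server options, and the default values here are arbitrary.

```python
class HealthState:
    """Track one backend and require consecutive results before changing state."""

    def __init__(self, rise=3, fall=2):
        self.rise = rise      # consecutive passes needed to return to service
        self.fall = fall      # consecutive failures needed to be removed
        self.healthy = True
        self._streak = 0      # consecutive results that contradict the current state

    def record(self, passed):
        """Record one poll result and return whether the backend is in service."""
        if passed == self.healthy:
            self._streak = 0  # result agrees with the current state; reset
        else:
            self._streak += 1
            needed = self.fall if self.healthy else self.rise
            if self._streak >= needed:
                self.healthy = passed
                self._streak = 0
        return self.healthy
```

With `rise=3`, a server that was removed must pass three polls in a row before it receives live traffic again, and a single failure in between resets the count.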

ii) DNS integrated global load balancing

DNS-integrated global load balancing (GLB) is needed for applications that require a higher level of uptime. It provides the ability to distribute client requests across groups of subnets, regions, zones, and/or servers. The DNS-enabled global load balancer should be able to perform health checks on the load balancers connected under it and, using the relevant virtual IP, direct clients to active servers.

Conclusion

Load balancing is used to distribute load among multiple servers, clusters, and other resources to optimize resource usage, maximize throughput, and minimize response time while avoiding overload. The load balancer is a key component in a microservice architecture, and it is mainly required to:

- Build scalable services

- Improve throughput and decrease latency

- Avoid overloading a single backend

- Allow services to be updated on the fly