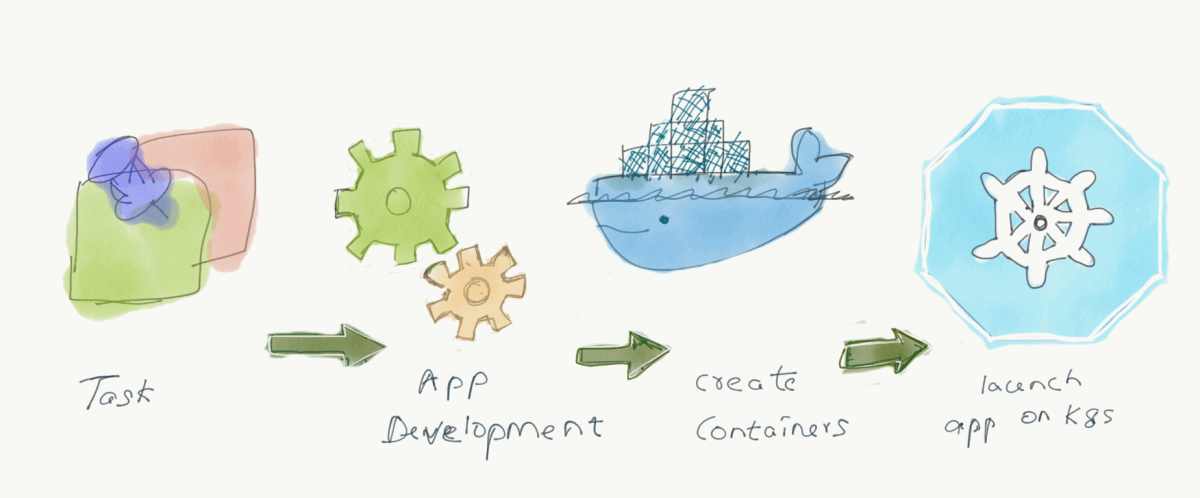

Summary: Kubernetes is an open-source container orchestration platform inspired by Borg, Google's internal cluster manager. It automates the deployment, scaling, management, and networking of containers. It was open-sourced in 2014, was originally developed by Google, and is written in the Go programming language. Many engineers now study and contribute to Kubernetes (K8s), which continues to drive its development. In this article, I explain the Kubernetes architecture diagram in detail.

Read: Introduction to Kubernetes

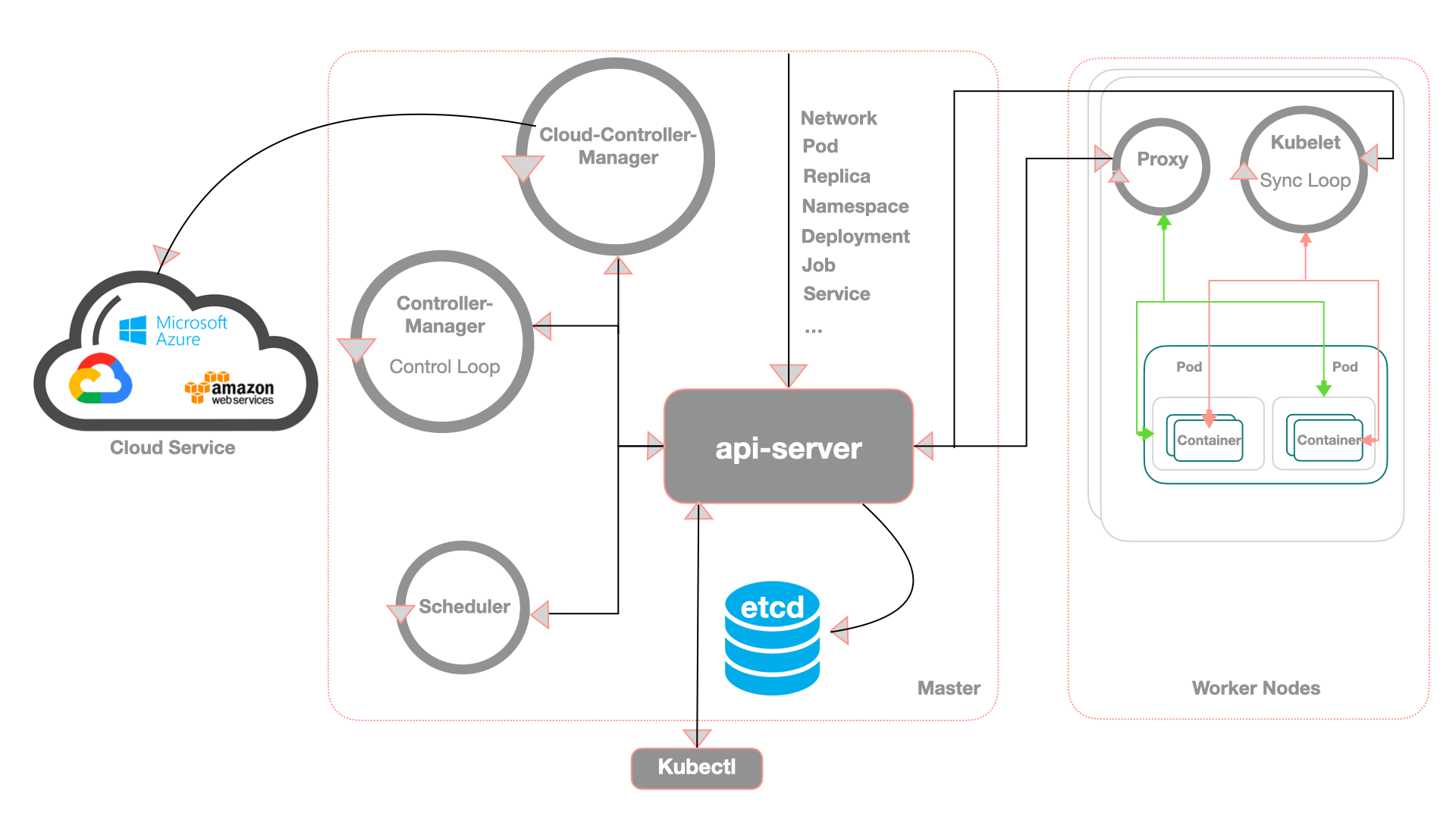

Kubernetes architecture explained

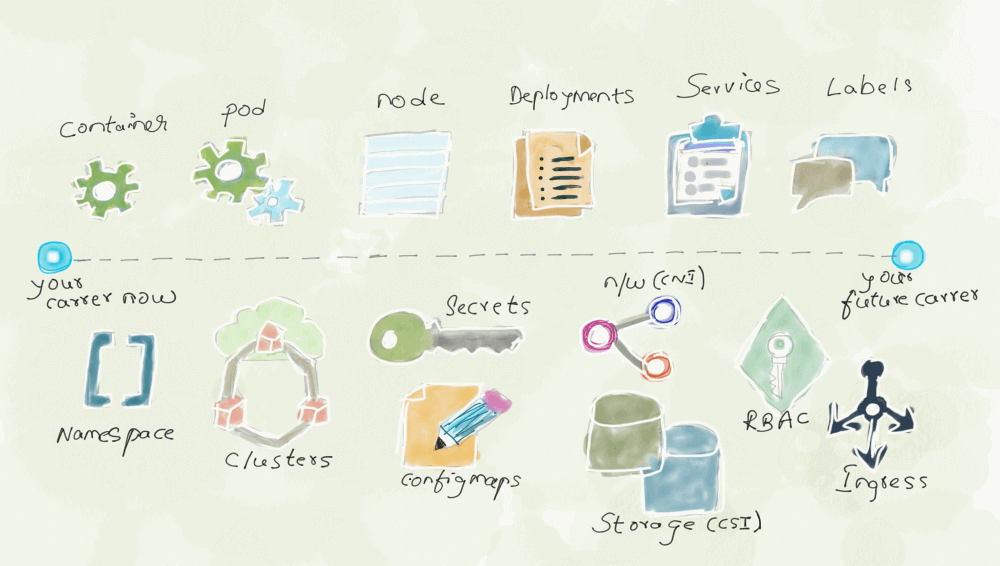

Kubernetes follows a client-server architecture. A cluster is composed of a master node (a.k.a. the control plane) and a set of worker nodes.

The master node is the control node of a Kubernetes cluster. Each cluster needs at least one master, which is responsible for managing and controlling the entire cluster. Its major role is to schedule work across the worker nodes. The primary components that run on the master node are the API server, Controller Manager, etcd, Cloud Controller Manager, and Scheduler (each explained briefly below).

Note: In Kubernetes, the unit of work that gets scheduled is called a Pod, and a Pod can hold one or more containers.

The nodes can be either physical servers or virtual machines (VMs). Users of the Kubernetes environment interact with the master node using either a command-line interface (kubectl), an application programming interface (API), or a graphical user interface (GUI).

Kubernetes has two goals: to be a cluster manager and a resource manager. It uses a master-to-worker node model, which means worker nodes can be added or removed to scale the cluster. A cluster can contain worker nodes of different sizes for different workloads, and the resource manager part finds a suitable node in your cluster to run each piece of work.

Note: Each Kubernetes cluster includes at least one master node and at least one worker node. (A cluster usually contains multiple worker nodes.)

Below are the control plane and node components that are tied together in a Kubernetes cluster. (Refer to Kubernetes architecture diagram above)

Control Plane components

The master node provides a running environment for the control plane, which manages the state of the cluster. Each control plane component plays a distinct role in cluster management.

Note: It is important to keep the control plane running at all costs. Losing the control plane may cause downtime and service disruption for clients, with possible loss of business.

To ensure the control plane is fault-tolerant, master nodes should be configured in high availability mode. In this mode the control plane components are replicated across several master nodes and kept in sync. For components such as the scheduler and the controller manager, only one replica is active at a time. If the active replica fails, another replica takes over and continues the operations of the Kubernetes cluster without downtime. This configuration adds resiliency to the cluster control plane. In managed versions of Kubernetes, the provider generally takes care of this.
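
To make the failover idea concrete, here is a minimal sketch of a time-limited lease, which is one common way to keep a single replica active while letting another take over. The `Lease` class, the replica names, and passing the clock in as `now` are my own assumptions for illustration; this is not the actual Kubernetes leader-election code.

```python
class Lease:
    """A lock that expires unless its holder keeps renewing it."""

    def __init__(self, duration):
        self.duration = duration   # how long one acquisition stays valid
        self.holder = None         # name of the replica that currently leads
        self.expires_at = 0.0      # time at which the lease becomes free

    def try_acquire(self, candidate, now):
        """Return True if `candidate` holds the lease after this call."""
        free = self.holder is None or now >= self.expires_at
        if free or self.holder == candidate:
            # Either take over a free or expired lease, or renew our own.
            self.holder = candidate
            self.expires_at = now + self.duration
            return True
        return False
```

Each replica would call `try_acquire` on a timer. As long as the active replica keeps renewing, the others are refused; once it stops (for example because its node failed), the lease expires and the next caller becomes the active replica.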

The primary components that run on the master node are:

- API server

- Controller Manager

- Etcd

- Cloud Controller Manager

- Scheduler

API server

All administrative tasks are coordinated by the kube-apiserver, the central control plane component running on the master node. The API server intercepts calls from users, operators, and external agents, then validates and processes them. During processing, the API server reads the current state of the cluster from etcd, and after the call is executed, the resulting cluster state is saved back into etcd, a distributed key-value data store, for persistence.

The API server is the only control plane component that talks to etcd directly, both to read and to write cluster state information. It acts as the middleman for every other control plane agent.
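
The validate, read, and persist flow can be sketched in a few lines. This is a toy model under my own assumptions: `ToyApiServer`, `ValidationError`, the required field names, and the key layout are all hypothetical, and a plain dict stands in for etcd.

```python
from dataclasses import dataclass, field


class ValidationError(Exception):
    """Raised when a submitted object is rejected before it is stored."""


@dataclass
class ToyApiServer:
    # Stand-in for etcd: a plain dict keyed by "/registry/<kind>/<name>".
    store: dict = field(default_factory=dict)

    def _key(self, kind, name):
        return f"/registry/{kind}/{name}"

    def create(self, obj):
        # 1. Validate the request before touching the store.
        for required in ("kind", "name", "spec"):
            if required not in obj:
                raise ValidationError(f"missing field: {required}")
        key = self._key(obj["kind"], obj["name"])
        # 2. Read the current state: refuse to overwrite an existing object.
        if key in self.store:
            raise ValidationError(f"{key} already exists")
        # 3. Persist the resulting state.
        self.store[key] = dict(obj)
        return key

    def get(self, kind, name):
        # Other components read state through the server, never the store.
        return self.store[self._key(kind, name)]
```

The point of the design is that nothing else holds a reference to `store`: every read and write goes through `create` and `get`, which is the middleman role described above.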

Etcd

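etcd is a distributed key-value data store used to persist the cluster state. It stores state-related data only, not the data of the workloads themselves. New data is appended rather than overwritten in place, and obsolete data is compacted periodically to minimize the size of the data store. In managed Kubernetes services, etcd is built in and operated by the provider.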
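
The append-then-compact behavior is easier to see in code. The sketch below is a simplified, single-process model that I wrote for illustration; `ToyRevisionStore` and its methods are hypothetical names and not the etcd client API.

```python
class ToyRevisionStore:
    """Append-only key-value store with revisions and compaction."""

    def __init__(self):
        self.revision = 0
        # Each key maps to a list of (revision, value) pairs, oldest first.
        self.history = {}

    def put(self, key, value):
        # A write never replaces old data; it appends a new revision.
        self.revision += 1
        self.history.setdefault(key, []).append((self.revision, value))
        return self.revision

    def get(self, key, revision=None):
        """Return the value of `key` at `revision` (latest by default)."""
        target = self.revision if revision is None else revision
        result = None
        for rev, value in self.history.get(key, []):
            if rev <= target:
                result = value
        return result

    def compact(self, revision):
        """Drop superseded entries older than `revision`.

        Each key keeps its newest entry at or before `revision`, so reads
        at `revision` or later still return the right value.
        """
        for key, entries in self.history.items():
            older = [e for e in entries if e[0] <= revision]
            newer = [e for e in entries if e[0] > revision]
            self.history[key] = older[-1:] + newer
```

After three writes to the same key, `compact(2)` discards only the first revision: the store gets smaller, while the current value and the value at revision 2 stay readable.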

Scheduler

The role of the scheduler is to assign new objects, such as pods, to nodes. During the scheduling process, decisions are made based on the current cluster state and the requirements of the new object. The scheduler obtains, from etcd via the API server, the resource usage data for each worker node in the cluster and the new object's requirements, which are part of its configuration data.

The scheduler also takes into account Quality of Service (QoS) requirements, data locality, affinity, taints and tolerations, etc.
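
A scheduling decision can be thought of as a filter step followed by a scoring step. The sketch below illustrates that idea under heavy simplification: `Node`, `PodRequest`, and `schedule` are hypothetical names, taints are reduced to plain strings, and the scoring rule (most free CPU left over) is just one policy I picked for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float                      # CPU cores still unallocated
    free_mem: int                        # memory in MiB still unallocated
    taints: set = field(default_factory=set)


@dataclass
class PodRequest:
    name: str
    cpu: float
    mem: int
    tolerations: set = field(default_factory=set)


def schedule(pod, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    # Filter: keep nodes with enough resources whose taints are all tolerated.
    feasible = [
        n for n in nodes
        if n.free_cpu >= pod.cpu
        and n.free_mem >= pod.mem
        and n.taints <= pod.tolerations
    ]
    if not feasible:
        return None
    # Score: prefer the node left with the most free CPU, then memory.
    best = max(
        feasible,
        key=lambda n: (n.free_cpu - pod.cpu, n.free_mem - pod.mem),
    )
    return best.name
```

A pod that does not tolerate a node's taint never reaches the scoring step for that node, no matter how much free capacity the node has.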

Controller managers

Controller managers run controllers that regulate the state of the cluster. Controllers are watch loops that run continuously and compare the cluster's desired state with its current state. In case of a mismatch, corrective action is taken in the cluster until the current state matches the desired state.
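
Here is a minimal sketch of such a loop for a replica count. The function names and the `pod-<n>` naming scheme are my own assumptions, and a real controller would watch the API server instead of mutating a local set.

```python
def reconcile_once(desired, running):
    """One pass of the loop: move `running` one step toward `desired`.

    `running` is a set of pod names and is modified in place.
    Returns True when the current state already matches the desired state.
    """
    if len(running) < desired:
        # Too few replicas: start one under the first unused name.
        index = 0
        while f"pod-{index}" in running:
            index += 1
        running.add(f"pod-{index}")
        return False
    if len(running) > desired:
        # Too many replicas: stop one.
        running.remove(max(running))
        return False
    return True


def run_until_converged(desired, running, max_passes=100):
    """Repeat the pass until nothing is left to correct."""
    for passes in range(max_passes):
        if reconcile_once(desired, running):
            return passes   # number of corrective passes that were needed
    raise RuntimeError("state did not converge")
```

Each pass makes a single correction and then looks at the state again, so the same code handles scaling up, scaling down, and the steady state where there is nothing to do.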

Kube controller manager

Runs controllers that watch the shared state of the cluster through the API server and work to move the current state toward the desired state. Examples include the replication controller, endpoints controller, namespace controller, and service accounts controller. All the controllers are bundled into a single process to reduce complexity.

Cloud controller manager

Runs controllers responsible for interacting with the underlying infrastructure of a cloud provider, for example to support availability zones, manage storage volumes, and set up load balancing and routing.

Worker nodes

Worker nodes provide a running environment for client applications. These applications run as containerized microservices encapsulated in pods, which are controlled by the cluster control plane agents running on the master node.

Pods are scheduled on worker nodes, where they find the compute, memory, and storage resources they require, as well as the networking to talk to the outside world. The pod is the smallest scheduling unit in Kubernetes. It is a logical collection of one or more containers that are scheduled together.

To access applications from the external world, we connect to the worker nodes, not to the master node. A worker node has the following components:
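
One way to picture "scheduled together" is a small data structure. The `Container` and `Pod` classes below are hypothetical and much simpler than the real Kubernetes objects; the sketch assumes the pod's resource needs are the sum of its containers' requests and ignores details such as init containers.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str
    cpu_request: float = 0.0   # CPU cores
    mem_request: int = 0       # MiB


@dataclass
class Pod:
    name: str
    containers: list = field(default_factory=list)
    node: str = ""             # empty until the pod is scheduled

    def total_request(self):
        """Resources the whole pod needs on a single node."""
        cpu = sum(c.cpu_request for c in self.containers)
        mem = sum(c.mem_request for c in self.containers)
        return cpu, mem
```

Because `node` lives on the pod and not on the container, every container in the pod ends up on the same worker node, which must have room for the combined request.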

- Container Runtime

- kubelet

- Kube-proxy

Container Runtime

The container runtime is responsible for actually running the containers of a pod and for managing container images.

kubelet

The kubelet runs on each node in the cluster and communicates with the control plane components on the master node. It receives pod definitions, primarily from the API server, and interacts with the container runtime to run the containers associated with each pod. It also maintains the lifecycle of those containers.
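
The kubelet's job can be sketched as "make the runtime match the pods assigned to this node". Everything in the example is an assumption made for illustration: `FakeRuntime` only tracks names, `sync_node` is a hypothetical function, and pods are plain dicts instead of objects fetched from the API server.

```python
class FakeRuntime:
    """Hypothetical stand-in for a container runtime; it only tracks names."""

    def __init__(self):
        self.running = set()

    def start(self, container_id):
        self.running.add(container_id)

    def stop(self, container_id):
        self.running.discard(container_id)


def sync_node(node_name, pods, runtime):
    """Start and stop containers so the runtime matches the assigned pods.

    `pods` is a list of dicts with "name", "node", and "containers" keys.
    Returns the set of container ids that should be running on the node.
    """
    wanted = {
        f"{pod['name']}/{container}"
        for pod in pods
        if pod["node"] == node_name
        for container in pod["containers"]
    }
    # Start what is assigned to this node but not running yet.
    for container_id in sorted(wanted - runtime.running):
        runtime.start(container_id)
    # Stop what is running but no longer assigned to this node.
    for container_id in sorted(runtime.running - wanted):
        runtime.stop(container_id)
    return wanted
```

Calling `sync_node` repeatedly with the latest pod list is what keeps the container lifecycle in line with the pod definitions: new pods get started, removed pods get stopped, and pods for other nodes are ignored.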

Kube-proxy

kube-proxy is a network agent that runs on each node. It is responsible for dynamically updating and maintaining all networking rules on the node.
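
As a rough mental model, those networking rules map a service address to the pods behind it. The sketch below keeps such a table in memory and picks backends in round-robin order. `ToyProxy`, its methods, and the addresses are hypothetical, and the real kube-proxy typically programs kernel-level rules (for example with iptables) instead of choosing backends in Python.

```python
import itertools


class ToyProxy:
    """Keeps a service -> backends table and picks a backend per connection."""

    def __init__(self):
        self.rules = {}      # service address -> list of backend addresses
        self._cursors = {}   # service address -> round-robin iterator

    def update(self, service, backends):
        """Replace the rule for one service when its set of pods changes."""
        if backends:
            self.rules[service] = list(backends)
            self._cursors[service] = itertools.cycle(self.rules[service])
        else:
            # No pods left behind the service: remove the rule.
            self.rules.pop(service, None)
            self._cursors.pop(service, None)

    def route(self, service):
        """Return the backend for the next connection, or None if no rule."""
        cursor = self._cursors.get(service)
        return next(cursor) if cursor is not None else None
```

The "dynamic updates" part is the `update` call: whenever pods behind a service come and go, the rule for that service is rewritten and new connections follow the new table.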

My Two Cents

- Enable Docker image security: scan container images for known security vulnerabilities continuously and often, and automate the scanning.

- Use lightweight Docker images (refer to Distroless Images) and enable Docker Content Trust.

- Run containers with non-root user privileges. Refer to Dockerfile tips for production.

- Follow microservices design patterns: for example, make sure you are running one process per container.

- Last but not least, don’t adopt a new technology just because it is a cool thing. If you don’t have an exact use case or scenario for it, don’t use it for the sake of using it :). You are likely to fail big time.

Conclusion

Kubernetes has been widely adopted in recent years as the typical solution for deploying and running containerized applications. Please refer to the official website for more details. In a managed Kubernetes service (GKE, AKS, EKS), the master node is managed by the service provider and the worker nodes are managed by the user. In a self-managed open-source installation, both master and worker nodes are managed by the user.