What does Kubernetes do? Kubernetes is a platform for managing multiple containers running across multiple hosts. Like containers, it is designed to run anywhere: on-premises, in private and public clouds, and even in hybrid clouds. In this article, I try to explain in simple words what Kubernetes is and why you would use it.

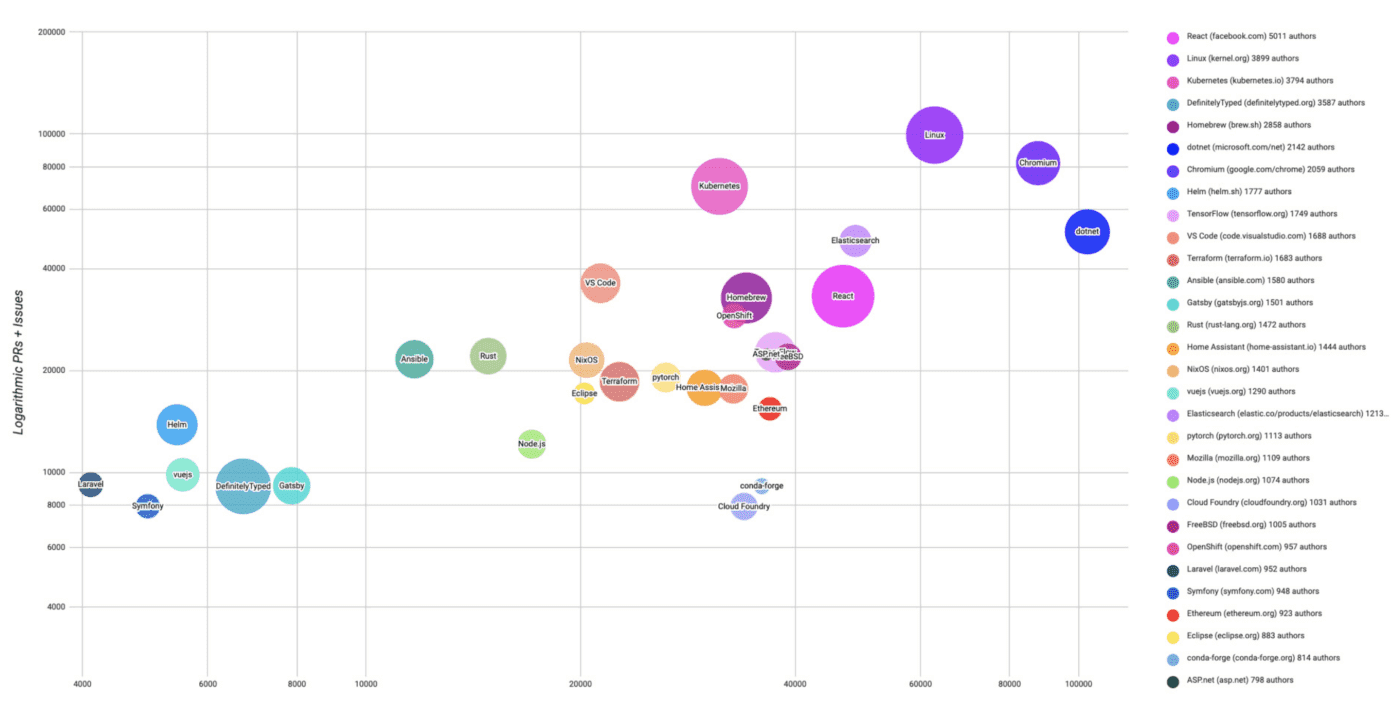

Kubernetes was originally created by the Google Borg/Omega team. It is one of the most popular open-source projects in history and has become a leader in the field of container orchestration.

The infographic (bubble chart) below shows the top 30 highest-velocity open-source projects as of June 2019; Kubernetes holds third position on the list.

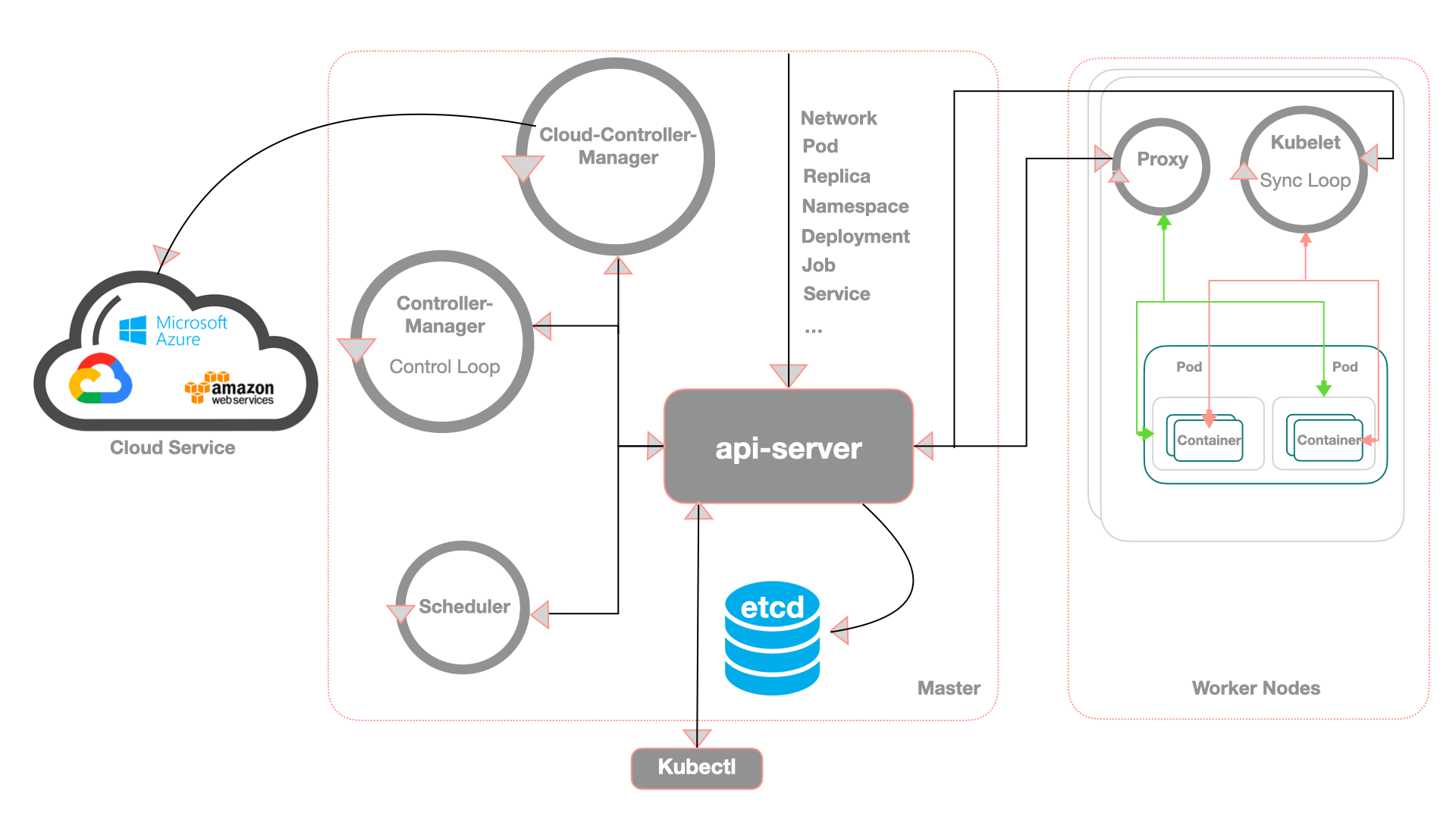


Before getting into Kubernetes, let’s look at some of the operational complexities of managing deployments in the early days.

Traditional deployment era

In the traditional deployment era, software applications were typically designed as monoliths and deployed directly on physical servers, with no way to define resource boundaries for each application. If several applications shared a server, one of them could consume most of the resources and leave the others underperforming.

A physical server setup could serve only a single business, because a physical server’s resources could not be divided among different tenants. Downtime was accepted as a matter of course, and high availability was rarely a requirement in those early days.

Virtualized deployment era

In the virtualized deployment era, applications are deployed on one or more virtual machines (VMs) running on a physical server. This greatly improves isolation between applications, each of which runs within defined resource boundaries (CPU and memory limits).

Although VMs provide complete isolation from the host OS and from other VMs, the virtualization layer has a striking negative effect on performance: virtualized workloads run about 30% slower than the equivalent containers. VMs remain useful where a strong security boundary is critical.

Container deployment era

Containers are considered lightweight. Each container has its own file system, share of CPU and memory, and process space, but containers share the host’s OS kernel and run directly on the real CPU with no virtualization overhead, just as ordinary binary executables do.

Containers are decoupled from the underlying infrastructure, so they can be ported across clouds and OS distributions. The container runtime also uses disk space and network bandwidth efficiently: an image is assembled from layers, and the runtime downloads a layer only if it is not already cached locally.
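To make the layer-caching point concrete, here is a minimal Python sketch. It treats an image as an ordered list of layer digests and the local cache as a set; the digests and the `pull_image` helper are hypothetical, and a real runtime also verifies and unpacks each layer, which this sketch skips.

```python
from typing import List, Set


def layers_to_pull(image_layers: List[str], local_cache: Set[str]) -> List[str]:
    """Return the layer digests missing from the local cache, in image order."""
    return [digest for digest in image_layers if digest not in local_cache]


def pull_image(image_layers: List[str], local_cache: Set[str]) -> List[str]:
    """Simulate a pull: 'download' only the missing layers and add them to the cache."""
    missing = layers_to_pull(image_layers, local_cache)
    local_cache.update(missing)  # a real runtime would fetch each layer blob here
    return missing


if __name__ == "__main__":
    cache: Set[str] = set()
    # Two hypothetical images that share their base OS and runtime layers.
    app_v1 = ["sha256:base-os", "sha256:runtime", "sha256:app-v1"]
    app_v2 = ["sha256:base-os", "sha256:runtime", "sha256:app-v2"]
    print(pull_image(app_v1, cache))  # ['sha256:base-os', 'sha256:runtime', 'sha256:app-v1']
    print(pull_image(app_v2, cache))  # ['sha256:app-v2']
```

Pulling the second image transfers only its top layer, because the shared layers are already cached.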

Advantages of Containers

- Agile app creation and deployment: Creating a container image is easier and more efficient than creating a VM image.

- Continuous deployment and integration: Deployments are quick, and rollbacks are easy.

- Dev and Ops separation of concerns: Application container images are created at build or release time rather than at deployment time, which decouples applications from infrastructure.

- Observability: Application health and other metrics can be observed.

Note: The shipping container was invented in the 1950s, when a truck driver named Malcolm McLean proposed the idea. Instead of tediously unloading goods one by one from truck trailers (heavy metal boxes on wheels) onto ships and other trucks along their journey, he proposed separating the metal box from the truck’s wheels so that it could be easily loaded, unloaded, stacked, or lifted onto another ship or truck. The tech industry eventually borrowed the same idea from the shipping industry.

Microservices

Microservices are a lightweight approach to designing an application as small, isolated services that can be tested, deployed, and managed completely independently. Developers can write each service in a different language, and each service packages its code together with its libraries, dependencies, and environment requirements. A microservice architecture helps developers take ownership of their part of the system, from design to delivery and ongoing operations. Major companies such as Amazon and Netflix have had significant success building their systems around microservices.

No discussion of microservices is complete without containers, although the two are not the same thing: a microservice may run in a container or in a fully provisioned VM, and a container doesn’t have to be used for microservices. In practice, microservices and containers together make it easier for developers to build and manage applications.

Container images

A container image is built from a Dockerfile and consists of a series of read-only layers. It bundles an application with all of its dependencies, and a container deployed from the image provides an isolated execution environment for the application.

You can run as many containers from the same image as you need, and the image can be deployed on many platforms, such as virtual machines and public, private, or hybrid clouds.

Container Orchestration

- Most container orchestrators can group hosts together to form clusters and schedule containers on a cluster based on resource availability.

- A container orchestrator enables containers in a cluster to communicate with each other, regardless of the host on which they are deployed.

- It manages and optimizes resource usage.

- It simplifies access to containerized applications by creating a level of abstraction between the container and the user, and it allows policies to be implemented to secure access to the applications running inside the containers.

With all these features, container orchestrators are the best choice when it comes to managing containerized applications. Most of the container orchestrators listed below can be deployed on bare-metal servers, public clouds, private clouds, and so on; in short, on the infrastructure of your choice. For example, you can run Kubernetes on managed cloud services such as AKS, EKS, and GKE, in a company data center, or on a workstation.

Other benefits of container orchestration include:

- Efficient resource management.

- Seamless scaling of services.

- High availability.

- Low operational overhead at scale.

A few container orchestration tools on the market today:

- Amazon ECS

- Docker Platform

- Google GKE

- Azure Kubernetes Service

- OpenShift Container Platform

- Oracle Container Engine for Kubernetes

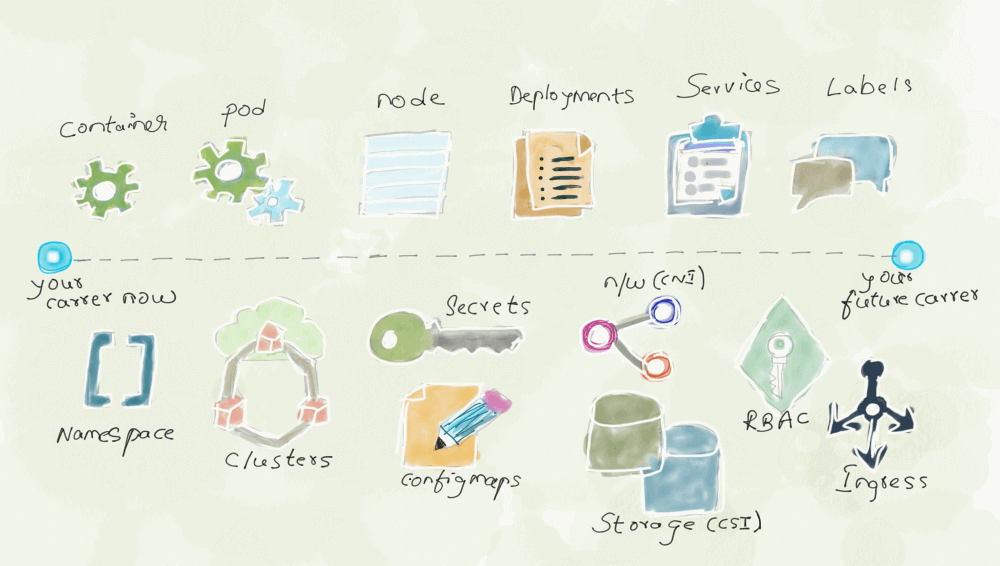

What is Kubernetes?

Per the official documentation:

Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications.

Kubernetes’ main goal is to take care of cluster management and orchestration. It provides a rich set of abstract, effective software primitives that automate compute, storage, networking, and other infrastructure services.

The key features of Kubernetes include:

- Automatic bin packing: You tell Kubernetes how much CPU and memory each container needs, and Kubernetes fits containers onto nodes accordingly to make the best use of resources.

- Self-healing: Kubernetes automatically restarts containers that fail. If an entire node fails, it replaces the affected containers and reschedules them onto other nodes.

- Horizontal scaling (HPA): Applications can be scaled horizontally, either manually or automatically with the Horizontal Pod Autoscaler based on CPU utilization or custom metrics.

- Service discovery and load balancing: Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and it load-balances requests across them.

- Automated rollouts and rollbacks: Kubernetes progressively rolls out changes to an application or its configuration while monitoring application health, so that it does not take down all instances at once. If something goes wrong, Kubernetes will roll back the change for you.

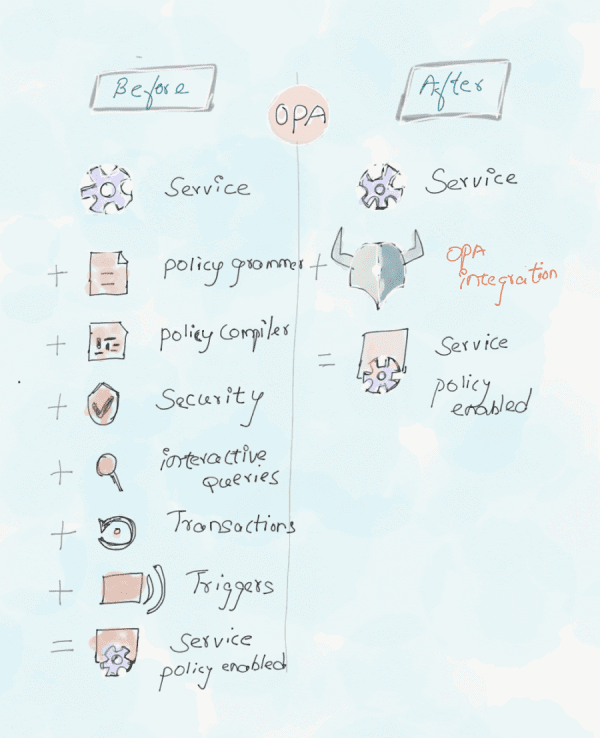

- Secret and configuration management: Kubernetes stores and manages secrets and application configuration separately from container images, so you can update them without rebuilding the images.

- Storage orchestration: Kubernetes can automatically mount the storage system of your choice, such as local storage or a public cloud provider’s storage.

- Batch execution: In addition to long-running services, Kubernetes can manage batch and CI workloads (Jobs), replacing containers that fail if desired.
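The service discovery and load-balancing feature can be pictured with a toy model: one stable Service name resolves to whatever Pod IPs currently back it. This is a Python sketch under simplifying assumptions; the `Service` and `ClusterDNS` classes and the IP addresses are hypothetical. Real clusters typically publish names such as `web.default.svc.cluster.local`, and kube-proxy does not necessarily use round-robin (in iptables mode it picks a backend at random).

```python
import itertools
from typing import Dict, List


class Service:
    """Toy model of a Kubernetes Service: a stable name in front of changing Pod IPs."""

    def __init__(self, name: str, pod_ips: List[str]) -> None:
        self.name = name
        self.set_endpoints(pod_ips)

    def set_endpoints(self, pod_ips: List[str]) -> None:
        # Called whenever Pods are added or removed; clients keep using the same name.
        if not pod_ips:
            raise ValueError("this sketch needs at least one ready Pod to route to")
        self._endpoints = list(pod_ips)
        self._next = itertools.cycle(self._endpoints)

    def route(self) -> str:
        # Simplified round-robin choice of a backend Pod IP for one request.
        return next(self._next)


class ClusterDNS:
    """Toy DNS (hypothetical helper): maps a Service name to the Service object."""

    def __init__(self) -> None:
        self._records: Dict[str, Service] = {}

    def register(self, service: Service) -> None:
        self._records[service.name] = service

    def resolve(self, name: str) -> Service:
        return self._records[name]


if __name__ == "__main__":
    dns = ClusterDNS()
    dns.register(Service("web", ["10.244.1.5", "10.244.2.7", "10.244.3.9"]))
    web = dns.resolve("web")
    print([web.route() for _ in range(4)])
    # ['10.244.1.5', '10.244.2.7', '10.244.3.9', '10.244.1.5']
```

Clients only ever use the name `web`; when Pods are replaced, only the endpoint list changes.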

Docker and (vs) Kubernetes

Still confused about how Docker and Kubernetes work together?

It’s simple: Docker lets you “create” containers, and Kubernetes helps you “manage” them. Use Docker to package and ship your application and its dependencies; use Kubernetes to deploy and scale your application.

You need a container runtime such as Docker Engine to start and stop containers on a host. When you have many containers running across many hosts, you need an orchestrator to handle questions like these:

- Where will the next container start?

- How do you make a container scalable based on CPU/Memory?

- How do you manage which containers can communicate with other containers?

- How do you restart failed containers, and replace and reschedule containers when a node dies?

That’s where Kubernetes comes into the picture.
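The first question, where the next container should start, is answered by the scheduler in two broad phases: filter out nodes that cannot fit the Pod’s resource requests, then score the remaining ones. The Python sketch below is a simplified illustration, not kube-scheduler’s code; the scoring rule (prefer the node with the most CPU and memory left free) is an assumption loosely modeled on a least-allocated strategy, and the node and Pod values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int   # allocatable CPU in millicores
    mem_capacity_mi: int  # allocatable memory in MiB
    cpu_used_m: int = 0   # sum of CPU requests already placed here
    mem_used_mi: int = 0  # sum of memory requests already placed here


@dataclass
class Pod:
    name: str
    cpu_request_m: int
    mem_request_mi: int


def fits(pod: Pod, node: Node) -> bool:
    """Filter step: does the node have enough unrequested CPU and memory?"""
    return (node.cpu_used_m + pod.cpu_request_m <= node.cpu_capacity_m
            and node.mem_used_mi + pod.mem_request_mi <= node.mem_capacity_mi)


def score(pod: Pod, node: Node) -> float:
    """Score step (assumed rule): average fraction of CPU and memory left after placement."""
    cpu_free = (node.cpu_capacity_m - node.cpu_used_m - pod.cpu_request_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_used_mi - pod.mem_request_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None if it must stay Pending."""
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None
    best = max(feasible, key=lambda n: score(pod, n))
    # "Bind": record the pod's requests against the chosen node.
    best.cpu_used_m += pod.cpu_request_m
    best.mem_used_mi += pod.mem_request_mi
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 4000, 8192, cpu_used_m=3000, mem_used_mi=2048),
             Node("node-b", 2000, 4096, cpu_used_m=500, mem_used_mi=1024)]
    print(schedule(Pod("web-1", 500, 1024), nodes))    # node-b
    print(schedule(Pod("batch-1", 2000, 512), nodes))  # None: no node has 2000m free
```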

Why use Kubernetes?

Many companies have adopted Kubernetes because moving from a single machine to a cluster has become inevitable, and the rapid growth of cloud computing is accelerating this shift. The following aspects help explain why you might use Kubernetes for your workloads.

One Platform handles everything

With Kubernetes, deploying applications is straightforward: in general, if an application can be packaged in a container, Kubernetes can run it.

Regardless of the language or framework an application is written in (Java, Angular, Node.js, and so on), Kubernetes can run it in any environment, whether physical servers, virtual machines, or the cloud.

Seamless migration between cloud environments

Kubernetes is cloud-agnostic. Have you ever tried migrating workloads from, say, AWS to GCP or Azure? Kubernetes is compatible with many cloud providers, such as GCP, AWS, Azure, and OpenStack, as well as on-premises environments, so you can move workloads without redesigning your applications and largely without worrying about the specifics of your cloud provider.

Efficient use of resources

Kubernetes places Pods onto nodes according to their resource requests (automatic bin packing). When nodes are not running at full capacity, the Cluster Autoscaler can move their Pods to other nodes and shut down the unneeded nodes, so resources are used efficiently.
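Bin packing is easiest to see as a packing problem: fit Pod CPU requests onto as few nodes as possible. The sketch below uses the classic first-fit-decreasing heuristic in Python; this is a swapped-in textbook technique for illustration, not what the Kubernetes scheduler or Cluster Autoscaler actually runs, and it considers only CPU (in millicores) with hypothetical Pod names and requests.

```python
from typing import Dict, List


def pack_pods(pod_cpu_m: Dict[str, int], node_capacity_m: int) -> List[List[str]]:
    """First-fit decreasing: place the largest pods first, each on the first node with room.

    Returns one list of pod names per node that ends up in use.
    """
    node_used: List[int] = []
    node_pods: List[List[str]] = []
    # Largest requests first; ties broken by name so the result is deterministic.
    for name, cpu in sorted(pod_cpu_m.items(), key=lambda kv: (-kv[1], kv[0])):
        if cpu > node_capacity_m:
            raise ValueError(f"pod {name} requests more CPU than a node has")
        for i, used in enumerate(node_used):
            if used + cpu <= node_capacity_m:
                node_used[i] += cpu
                node_pods[i].append(name)
                break
        else:
            # No existing node has room: "open" a new node.
            node_used.append(cpu)
            node_pods.append([name])
    return node_pods


if __name__ == "__main__":
    # Hypothetical CPU requests in millicores, on nodes with 4 CPUs (4000m) each.
    requests = {"api": 1500, "web": 1000, "worker": 2500, "cache": 500, "cron": 500}
    print(pack_pods(requests, 4000))
    # [['worker', 'api'], ['web', 'cache', 'cron']]
```

Five Pods requesting 6000m in total fit on two 4-CPU nodes instead of five, which is the kind of saving that lets underused nodes be removed.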

Out-of-the-box auto-scaling capability

Kubernetes can scale applications based on CPU and memory usage as well as custom metrics. It supports several types of scaling:

- Horizontal Pod Autoscaler (HPA): automatically increases or decreases the number of Pod replicas based on observed metrics such as CPU, memory, or custom metrics. For example, when a spike in user traffic arrives, Kubernetes can add Pod replicas to serve the requests and remove them again once traffic subsides.

- Vertical Pod Autoscaler (VPA): adjusts the CPU and memory requests of Pods based on application needs. It monitors workload resource usage over time and recommends, and can apply, optimal resource requests. (VPA is installed as an add-on rather than being part of core Kubernetes.)

- Cluster Autoscaler: adds nodes to the cluster when Pods are pending because of resource shortages, and removes nodes whose utilization stays below a defined threshold.
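The Horizontal Pod Autoscaler’s core rule, as documented by Kubernetes, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no scaling when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that rule and clamps the result to min/max bounds; it is a simplification that ignores stabilization windows, Pod readiness, and multiple metrics, and the default bounds here are arbitrary.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule (simplified sketch).

    desired = ceil(current_replicas * current_metric / target_metric)
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: skip scaling
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds (minReplicas / maxReplicas in an HPA spec).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, current_metric=90, target_metric=50))  # 6
    print(desired_replicas(4, current_metric=20, target_metric=50))  # 2
```

For example, 3 replicas averaging 90% CPU against a 50% target gives ceil(3 * 1.8) = 6 replicas; when traffic drops to 20%, 4 replicas shrink to ceil(4 * 0.4) = 2.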

Make CI/CD easier

Kubernetes makes the CI/CD process less painful. A number of tools on the market (Jenkins, CircleCI, and others) can help automate continuous integration and delivery pipelines that deploy to a Kubernetes cluster.

In addition, you don’t need to be an expert in Ansible or Chef: a simple script run by your continuous integration service can create a new Deployment and roll out your container workloads to the Kubernetes cluster. It is as simple as that.

Because the application is packaged in a container that can be launched anywhere, from your laptop to a cloud server, it is easy to test.
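A CI script of that kind could be as small as a function that emits a Deployment manifest for the image the pipeline just built. This Python sketch assumes a hypothetical app name, registry, and tag; it builds a minimal `apps/v1` Deployment as JSON, which `kubectl apply -f -` can read from standard input.

```python
import json
from typing import Any, Dict


def deployment_manifest(app: str, image: str, replicas: int = 2, port: int = 8080) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": app}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": app, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # In CI the tag would usually come from the build, e.g. the commit SHA (hypothetical here).
    manifest = deployment_manifest("web", "registry.example.com/shop/web:3f2a1c9")
    print(json.dumps(manifest, indent=2))
```

In a pipeline step, something like `python make_deployment.py | kubectl apply -f -` would then create or update the Deployment; the script name is illustrative.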

Reliability

An important reason for the popularity of Kubernetes is that applications keep running smoothly despite Pod or even entire node failures. When a failure occurs, Kubernetes reschedules the affected Pods onto healthy nodes, keeping the desired number of replicas running until the system is restored.
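Self-healing comes from control loops that keep comparing the desired state with the observed state and acting on the difference. Below is a toy Python version of one reconcile pass for a replicated app; the function, node names, and Pod naming scheme are hypothetical, and real controllers (such as the ReplicaSet controller working together with the scheduler) are considerably more involved.

```python
import itertools
from typing import Dict, Iterator, List


def reconcile(pods: Dict[str, str], healthy_nodes: List[str], desired: int,
              app: str, ids: Iterator[int]) -> Dict[str, str]:
    """One pass of a toy ReplicaSet-style control loop.

    pods maps pod name -> node name. Returns the new pod map after the pass.
    """
    # Observe: pods on nodes that are no longer healthy are treated as lost.
    alive = {name: node for name, node in pods.items() if node in healthy_nodes}
    if not healthy_nodes:
        return alive  # nowhere to run: replacements would stay Pending
    # Act (scale up): create replacements on the healthy node with the fewest pods.
    while len(alive) < desired:
        load = {node: 0 for node in healthy_nodes}
        for node in alive.values():
            load[node] += 1
        target = min(healthy_nodes, key=lambda node: load[node])
        alive[f"{app}-{next(ids)}"] = target
    # Act (scale down): remove surplus pods if there are more than desired.
    for name in sorted(alive)[desired:]:
        del alive[name]
    return alive


if __name__ == "__main__":
    ids = itertools.count(1)
    pods = reconcile({}, ["node-a", "node-b"], 3, "web", ids)
    print(pods)  # {'web-1': 'node-a', 'web-2': 'node-b', 'web-3': 'node-a'}
    # node-a fails and node-c joins: the next pass replaces the two lost pods.
    pods = reconcile(pods, ["node-b", "node-c"], 3, "web", ids)
    print(pods)  # {'web-2': 'node-b', 'web-4': 'node-c', 'web-5': 'node-b'}
```

Running the pass repeatedly, rather than reacting to individual events, is what lets the system converge back to three replicas no matter which failure happened.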

What problem does Kubernetes solve?

Problem statement

- Code deployments and patches need to be rolled out and rolled back multiple times in a known, controlled way.

- Software needs to be tested more frequently, with quick feedback from that testing.

- The business needs applications and services to be available 24/7.

- Applications must handle traffic spikes during the holiday season (Black Friday, Cyber Monday, and so on).

- Cloud infrastructure costs must be kept low across both off-peak and peak holiday seasons.

Resolution

- Kubernetes can help solve all of these problems.

- We can run CI/CD on Kubernetes itself, distributing the load, running many builds in parallel, and scaling in or out based on demand.

- Deployment strategies such as A/B testing, canary, and blue-green deployments let you ship code quickly and get feedback from users. If everything looks good, you promote the artifacts to the next stage (a full deployment); otherwise, you roll back to the older version.

- For availability, use a managed Kubernetes platform from a major cloud provider such as AWS, Google Cloud, or Azure. At the time of writing, EKS offers a 99.95% uptime SLA; AKS offers 99.95% with availability zones enabled and 99.9% without them; and GKE offers 99.5% uptime for zonal clusters and 99.95% for regional clusters.

- The Horizontal Pod Autoscaler scales applications based on metrics such as CPU utilization or custom metrics (for example, requests per second). In short, with autoscaling enabled, the HPA adds and removes replicas to absorb sudden traffic bursts during events like Black Friday and Cyber Monday.

- Kubernetes is designed to run anywhere, so the business can run on a public, private, or hybrid cloud.

- Kubernetes helps keep operational costs low and developers productive. It supports a wide range of modern application types, making it a powerful platform not only for today’s applications but also for future ones.
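A canary rollout boils down to two decisions: how much traffic the new version gets, and whether its results justify promotion or rollback. The Python sketch below models both under stated assumptions: the weighted routing stands in for what an ingress controller or service mesh would do (with a plain Service, the split roughly follows the ratio of canary to stable Pods), and the 1-percentage-point error-rate margin is a hypothetical threshold, not a Kubernetes default.

```python
import random


def route_request(canary_weight: float, rng: random.Random) -> str:
    """Send roughly `canary_weight` of requests to the canary, the rest to stable."""
    return "canary" if rng.random() < canary_weight else "stable"


def canary_decision(canary_errors: int, canary_requests: int,
                    stable_errors: int, stable_requests: int,
                    max_extra_error_rate: float = 0.01) -> str:
    """Promote the canary unless its error rate exceeds stable's by more than the margin.

    The default margin (1 percentage point) is a hypothetical choice for this sketch.
    """
    if canary_requests == 0 or stable_requests == 0:
        return "wait"  # not enough traffic yet to compare
    canary_rate = canary_errors / canary_requests
    stable_rate = stable_errors / stable_requests
    if canary_rate <= stable_rate + max_extra_error_rate:
        return "promote"
    return "rollback"


if __name__ == "__main__":
    rng = random.Random(42)
    hits = sum(route_request(0.1, rng) == "canary" for _ in range(10_000))
    print(f"canary share: {hits / 10_000:.1%}")  # close to 10%
    print(canary_decision(2, 1000, 15, 9000))    # promote
    print(canary_decision(50, 1000, 15, 9000))   # rollback
```

In practice the decision step would read real metrics from your monitoring system and then either scale up the new version or revert it.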

What types of applications can run in Kubernetes?

Kubernetes is a great platform for building platforms: it helps you manage and scale the underlying infrastructure, including cloud infrastructure. You can build Platform as a Service (PaaS), serverless or Function as a Service (FaaS), and Software as a Service (SaaS) offerings on top of Kubernetes.

Kubernetes does not limit the languages or kinds of applications it supports. If an application can run successfully in a container, it should also run on Kubernetes. Kubernetes supports a wide variety of workloads, including:

- Stateless apps

- Stateful apps

- Big data and machine learning workloads

- Microservice workloads

Conclusion

Kubernetes is a tool for managing applications that run in many containers. Years ago, Google was already running all of its services, such as Gmail, Google Maps, and Google Search, in containers. Since no suitable orchestrator was available at the time, Google built its own, named Borg. Building on the lessons learned and the challenges faced with its internal container orchestration, Google founded an open-source project named Kubernetes in 2014.